Behind the Product: CloudStore Part 3 [The Advantages and Tradeoffs of CloudStore]

As with all technical projects, CloudStore involved a number of tradeoffs. In this final post, I’ll describe the advantages we gained and the challenges we face given our decisions along the way.

The advantages

CloudStore results in a few benefits to our users and our developers over building a web app using a more traditional way of calling the server to make the change, waiting for a response, and then updating the client’s state:

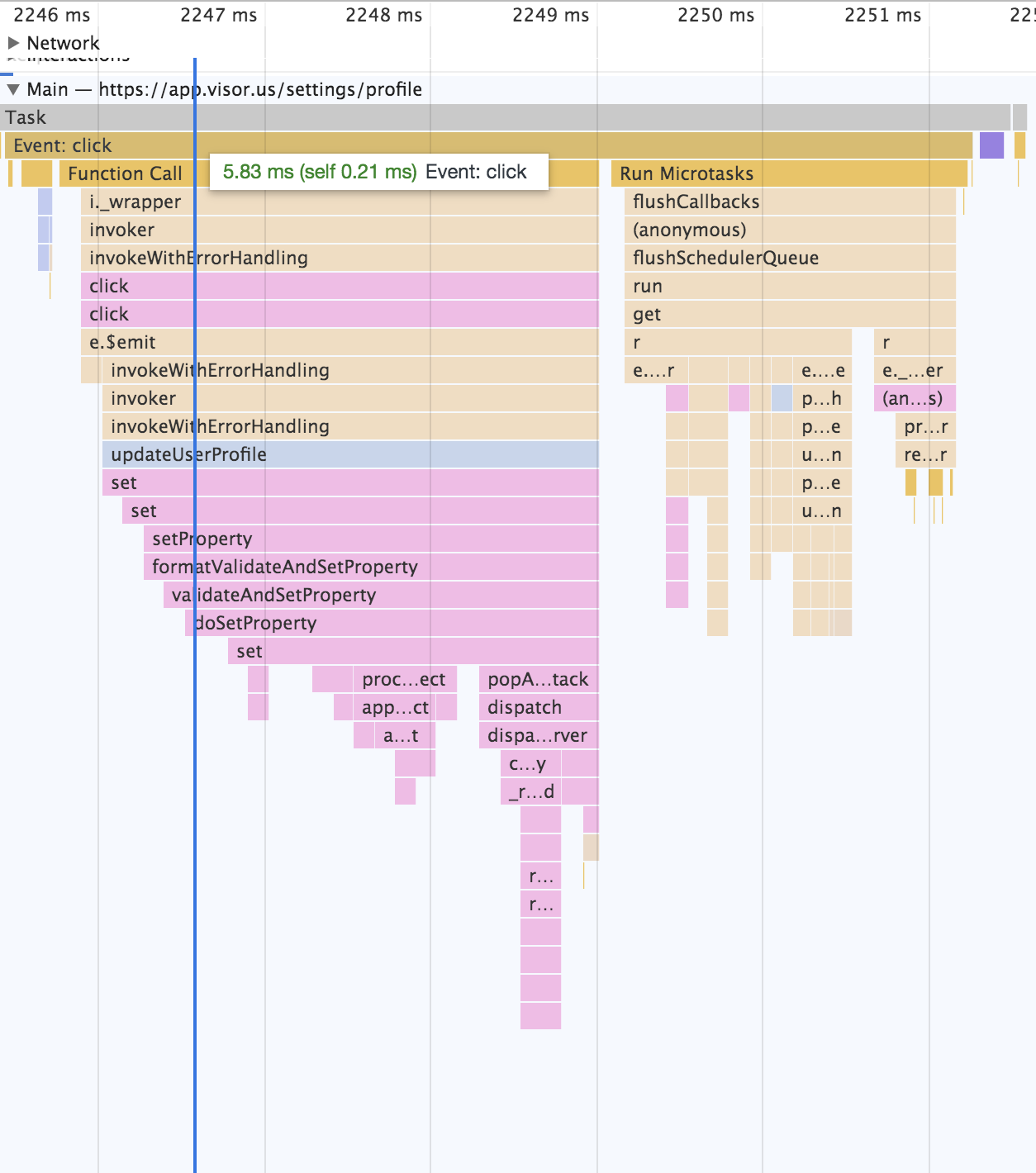

1) For our users, our UI is fast. Data mutations are made directly to the local datastore and reflected instantly in the UI. Synchronization with the server occurs asynchronously later. The user can keep working while the server processes the request. The only loading we need is for when we’re pulling new objects from the server into the subgraph or we’re running some server operation (such as a synchronization with Jira).

2) For both our users and our developers’ shared advantage, everything we build is real time collaborative by default. There’s no need to explicitly make state synchronized across client instances, so long as the UI is driven directly from the graph. At one point we added a threaded chat functionality with read receipts that worked out of the box.

3) For our developers, we’ve achieved exceptional productivity. To add features, there’s typically no server side code that needs to be written to support it. Once you think about problems in terms of the synchronized graph (which is an adaptation for some), technical challenges are usually easy to resolve.

Reflecting on the framework and challenges ahead

This framework build has left us generally happy with the result, but it’s still early for us. There was a period a few months into the framework, as it transitioned from proof of concept to mission critical, where I recognized just how much of a risk we were taking on by rolling our own datastore.

Picking a market and trying to build a product is risky enough. Adding technical risk on top of that is an even bigger bet. Throughout much of its development, however, building CloudStore and building our product seemed to be one in the same, so we persisted.

Even as we moved over to our web application from the Chrome extension, we still feel bullish on the framework. We couldn’t think of a faster way to build our MVP of Visor given that everything needed to be synchronized and we had all of this knowledge about how to do it with CloudStore. Nonetheless, some of our questions about the long-term technical risks about using our own framework are yet unanswered.

Scaling Postgres

How will Postgres scale for us, given the added abstraction layer we created on top of it?

Right now, an object update operation takes 25-30ms on average. Storing new objects takes about 35ms per object. Loading a single object also takes about 35ms, while loading 10 objects and 12 edges in one sample took 177ms. (These times are server processing times — overhead by Django and the network are not included). Most of the time is spent transacting with Postgres.

On the plus side, the SQL queries generated by Django are still simple enough to be understood by humans. The amount of complexity of adding this “graph” abstraction on top of Postgres isn’t as costly as I would have anticipated. The object’s “type” column performs most of the heavy lifting. It’s rare that we query based on properties in the JSONB blob, and even when we have, it’s been plenty fast. And traversing the graph is just “hopping” joins by primary keys. Most load queries involve one inner join and one outer join.

If we needed to scale out, it seems we could shard our data according to the unique organization GUIDs for each team on the product. That will be a big project, but it’s a champagne problem.

Corner cases?

What if we encounter a corner case that requires too much engineering time to solve?

As one HackerNews commenter on one of Asana’s LundaDB posts pointed out:

“Get the main stuff done (wow, this looks like a cool idea), but soon you are treading in deep waters as soon as the technology start hitting the edge cases, and day to day usability.”

Oh boy, did we hit this. I’ve definitely carved out days at a time to track down gnarly bugs and handle corner cases. Most such issues we were able to handle easily and they at least felt tractable. At this point, we’ve handled a number of them and the rate at which they pop up has drastically been reduced to the point where bugs and issues are seldom a problem in CloudStore.

We have logged a few corner cases or use cases that would be harder to solve, but we’ve found ways to work around them. For example, we haven’t made it possible yet to run true graph queries (e.g. finding records by the values of properties of records they’re attached to at multiple levels of depth). We already solved our ‘search’ problem by replicating objects in an Elasticsearch database. Perhaps we can replicate our graph in a purpose-built graph database like Neo4J or Amazon Neptune for running these types of queries. Of course, maintaining consistency between the main Postgres database and the graph database would be tricky.

Limitations of transacting whole objects

For now, it’s only possible to bring down an object in its entirety. Objects are the smallest unit of loadable data. As a consequence, it’s not possible today to bring down just certain properties of an object, such as a user’s given name and family name without bringing down other user properties on the object, such as email address. I’ve identified two scenarios where this could be problematic for us:

One scenario is security and access control. For example, if we set up web pages that use CloudStore data with public access, and Users are loaded down (such as to show who is currently looking at the document), then the entire User object would need to be loaded, including their email address. It wouldn’t be possible just to load the user’s name without their email. It seems that Dropbox even recently suffered from just such a problem, where they exposed email addresses of users who viewed public documents.

Another scenario is for speed and memory management. If there is an object with a large JSON payload, it’s not possible to pull down just part of that object.

Perhaps in the future we could implement some additional load parameters that specify what properties of an object come down, inspired by how GraphQL works.

Memory management questions

One problem that arises with realtime data is that subscribing objects to receive updates from the server means holding references to those objects in a master map. That way, when the server says it has an update for an object, the object can be retrieved and updated. Unfortunately, that means that the garbage collector will never release these objects unless these subscriptions are explicitly disconnected from the master map.

That’s challenging, because it means we now have to manually manage garbage collection on these objects. This is an issue that isn’t a problem right now, given the scale of the subgraphs our users are pulling in. To be precise, I’m not aware of any memory leaks within the code that result from ordinary use of the product within one loaded Workbook. But over time, if a user switched from one massive Workbook to another, we’d need to find a way to unsubscribe them from objects pulled down from the previous workbook.

I’ve already built a proof-of-concept for a solution that attempts to tie CloudStore objects to Vue components that use them. This way, when the Vue component is unmounted, we can consider whether the objects linked to it still need to be subscribed to server updates. At the prototype stage, it works, but who knows how many corner cases will arise. And the risk of unsubscribing an object that’s still needed is very high — it could cause some very confusing user situations.

Team scaling issues

Asana also pointed out a concern of their own with Luna, which I share:

“We struggled to onboard new engineers because product development required understanding our framework. “

This one is concerning, because right now we’re optimizing for developer velocity on a small team, but as we scale, will we encounter difficulty explaining how CloudStore works to those who weren’t present as it was built? Will external engineers even want to work with a proprietary system that isn’t a transferable skill they can put on their resume? The best solution we have right now is well-documented code, a strong base of examples, using good patterns that are familiar (e.g. Firebase, Mongo), and some companion documentation. Perhaps some day the framework could become open source.

Migrations?

Another challenge for us is migrations. How do you migrate data in a graph database? Do we write a script? How long would such a script take to run? Must they be atomic? How do we maintain compatibility with clients running outdated versions of our client-side code that depend on the old schema?

Similarly, how do we manage migrations in JSONB fields? It’s likely similar in theory to migrating when using MongoDB, which I presume has more literature. As of now, it seems the answer is: “very carefully with a script.” A blog on the topic by Coinbase confirmed as much.

Final thoughts

We probably wouldn’t have started out building this framework if we weren’t initially targeting a Chrome extension with unique requirements. But even though we discarded a lot of code as we’ve iterated, most of the discarded code was a thin UI layer on top of the code we ended up writing inside the framework itself. In that sense, by building CloudStore, we reduced waste while we search for product market fit. All the work we did to solve certain hard problems has transferred with us. We’ve also gained velocity in our ability to build new features, which is a huge advantage. For such a small team, I’m proud of what we’ve brought to market thanks to this framework.

As proud as we are of the technical accomplishments we’ve achieved, ultimately, our user experience is the entire reason why we write code: to make delightful customer experiences that help our users achieve their goals faster and more easily. We think this technology has made our product better for our customers. It has given our product a snappy user experience. Our loads are fast and user interactions are instant.

As much as there are tradeoffs and challenges ahead as we grow with CloudStore, we’re excited to see how the framework evolves and — perhaps one day — how it may help other teams.